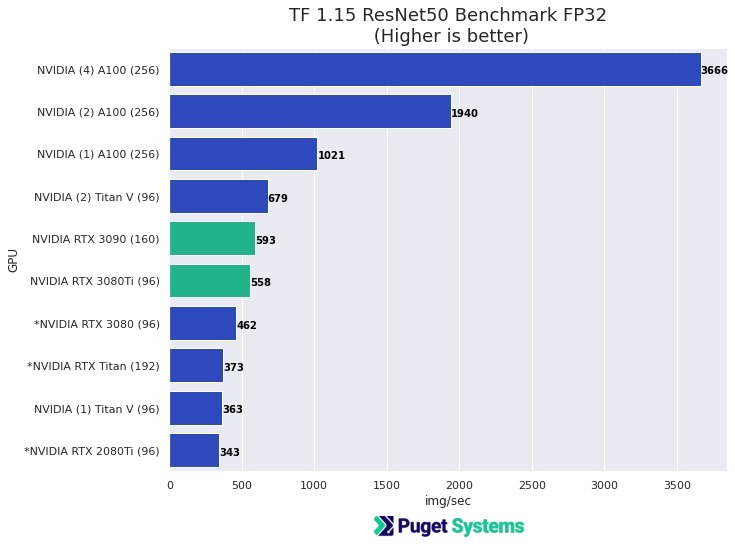

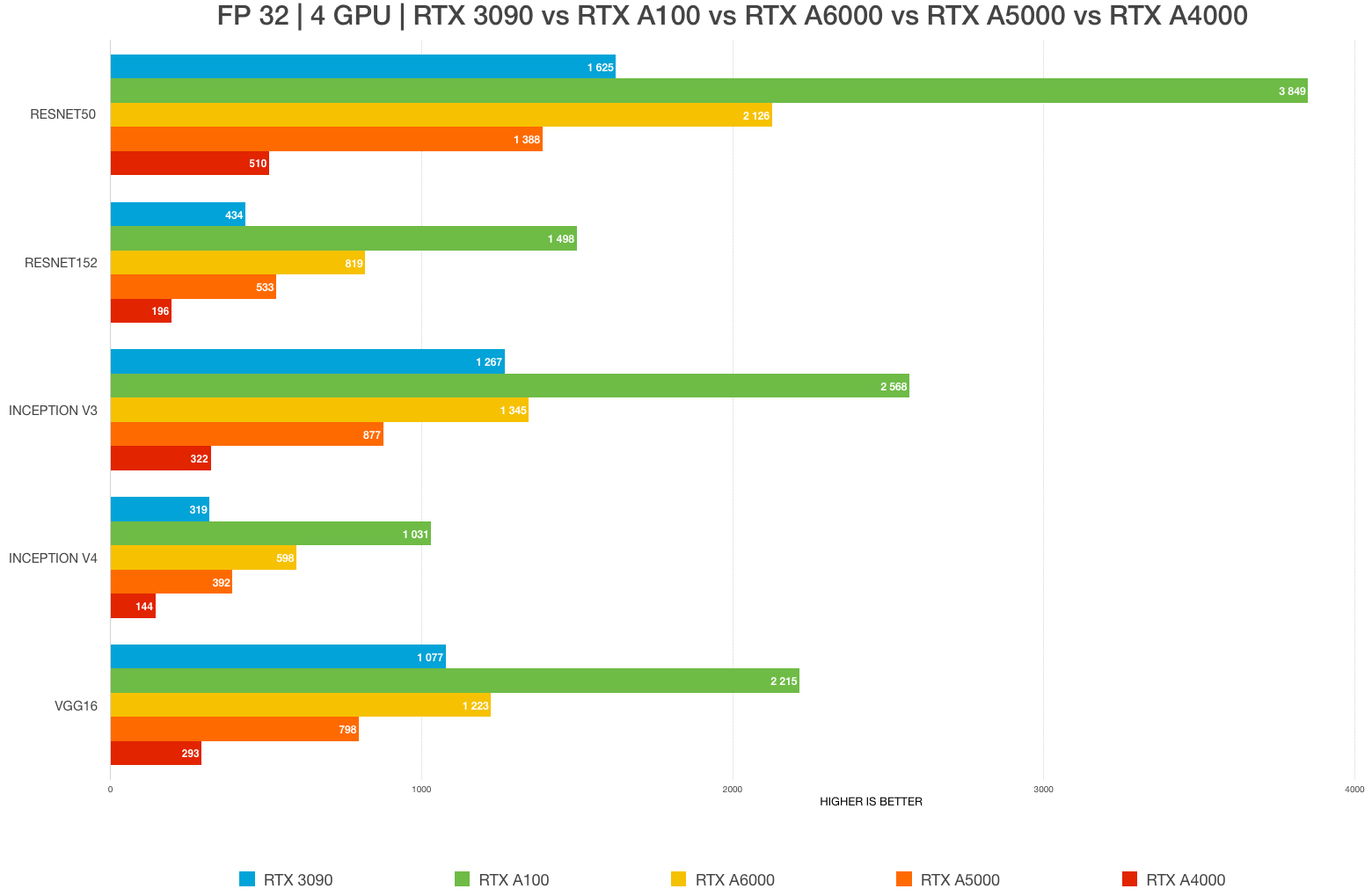

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

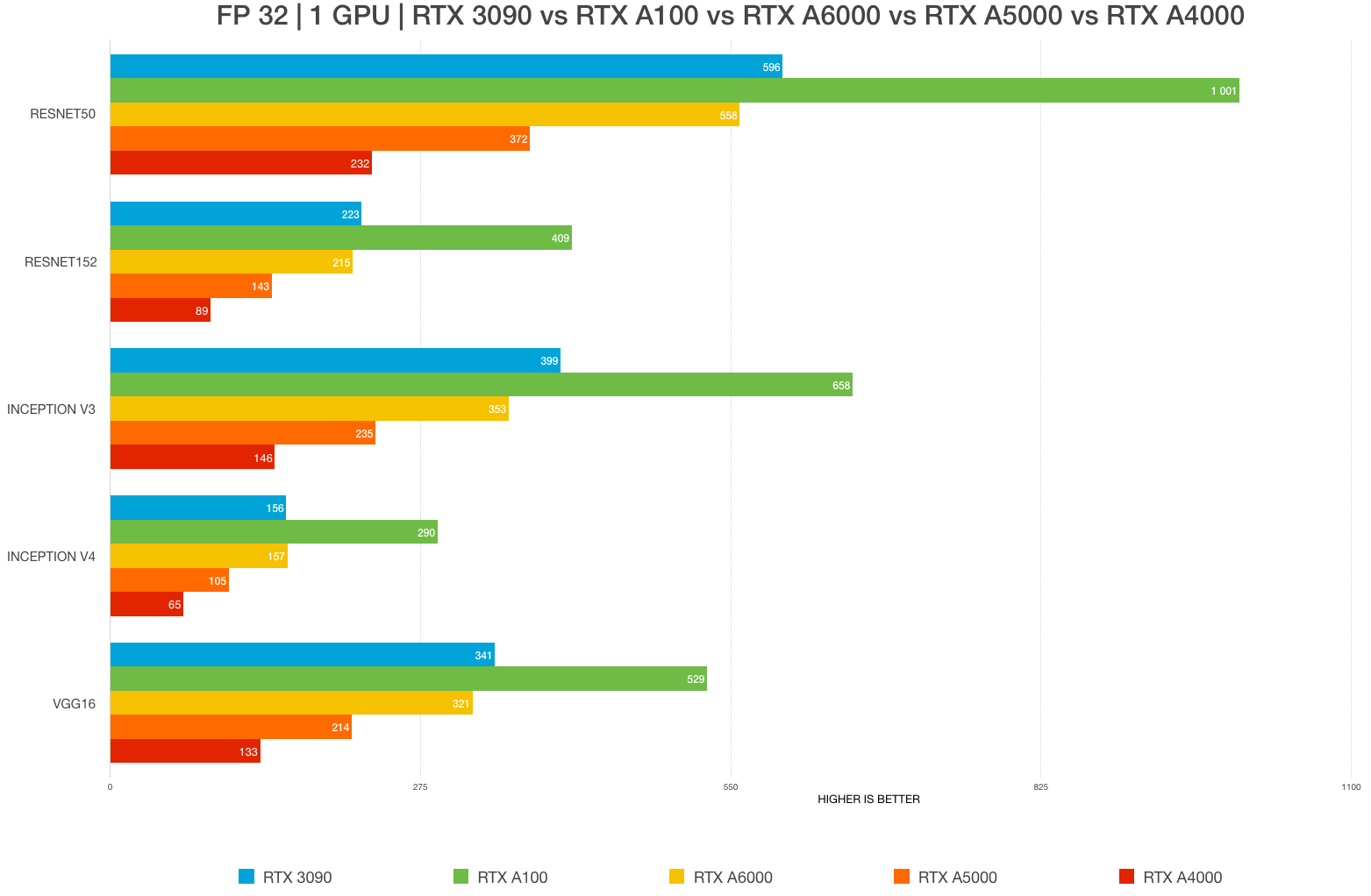

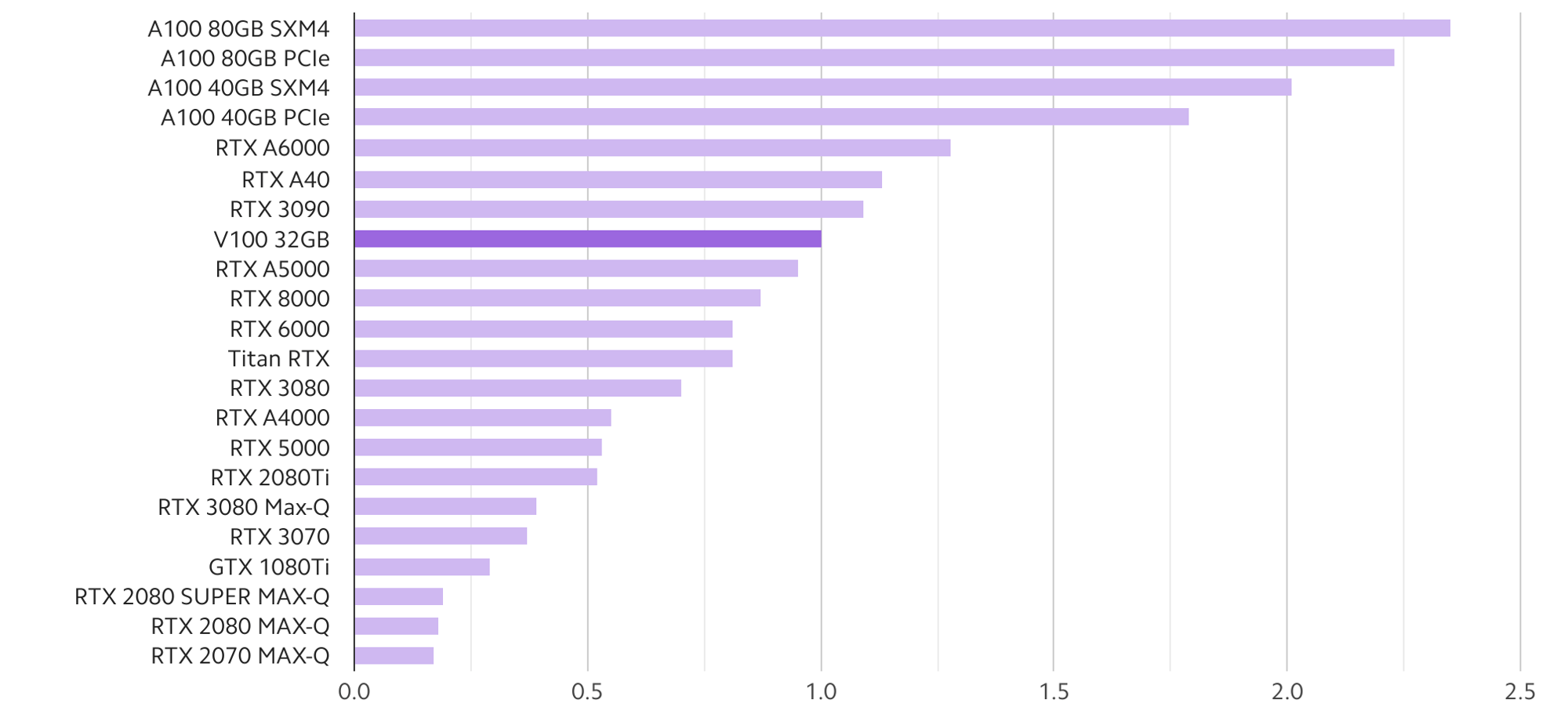

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

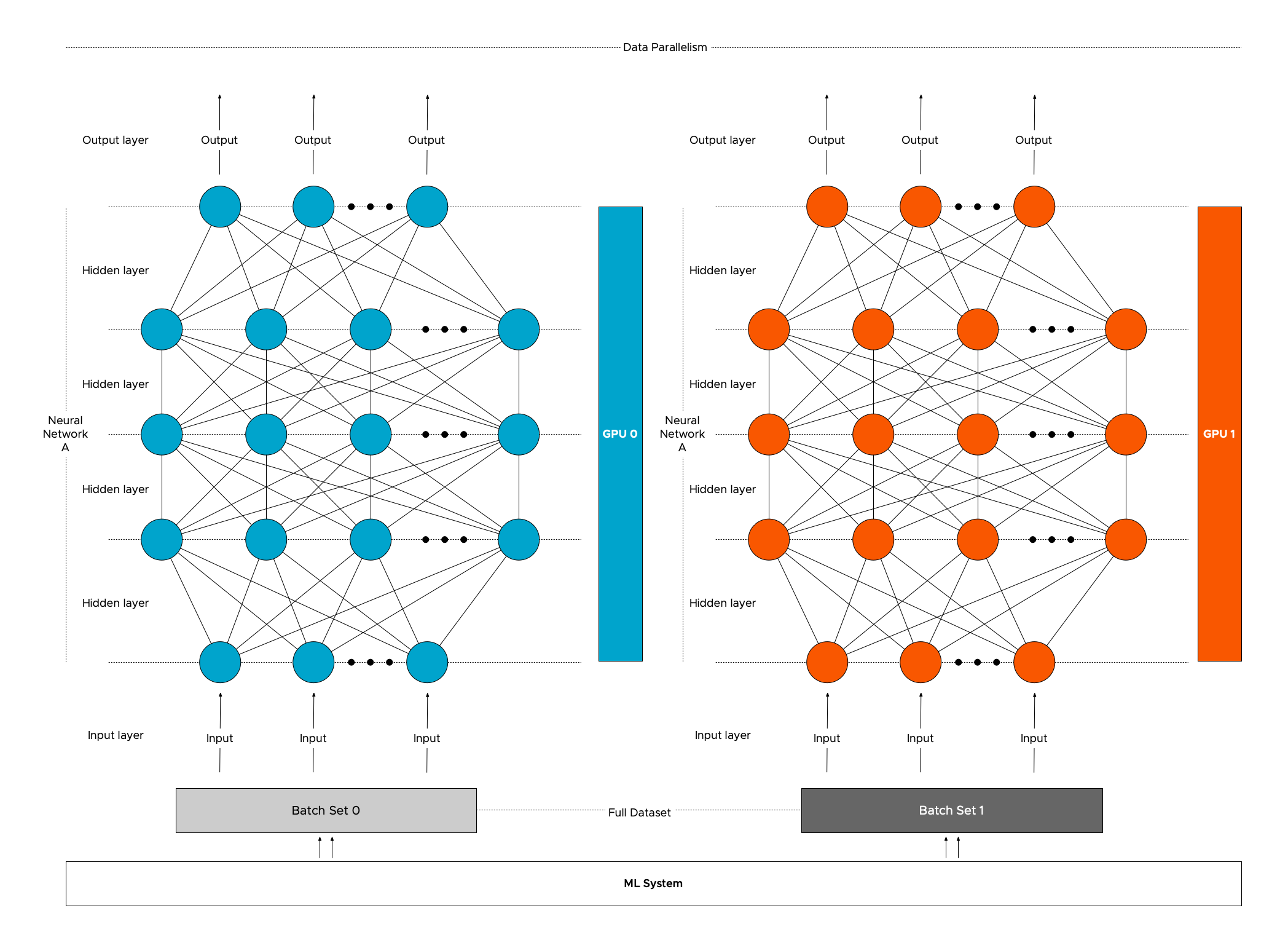

Optimizing Fraud Detection in Financial Services with Graph Neural Networks and NVIDIA GPUs | NVIDIA Technical Blog

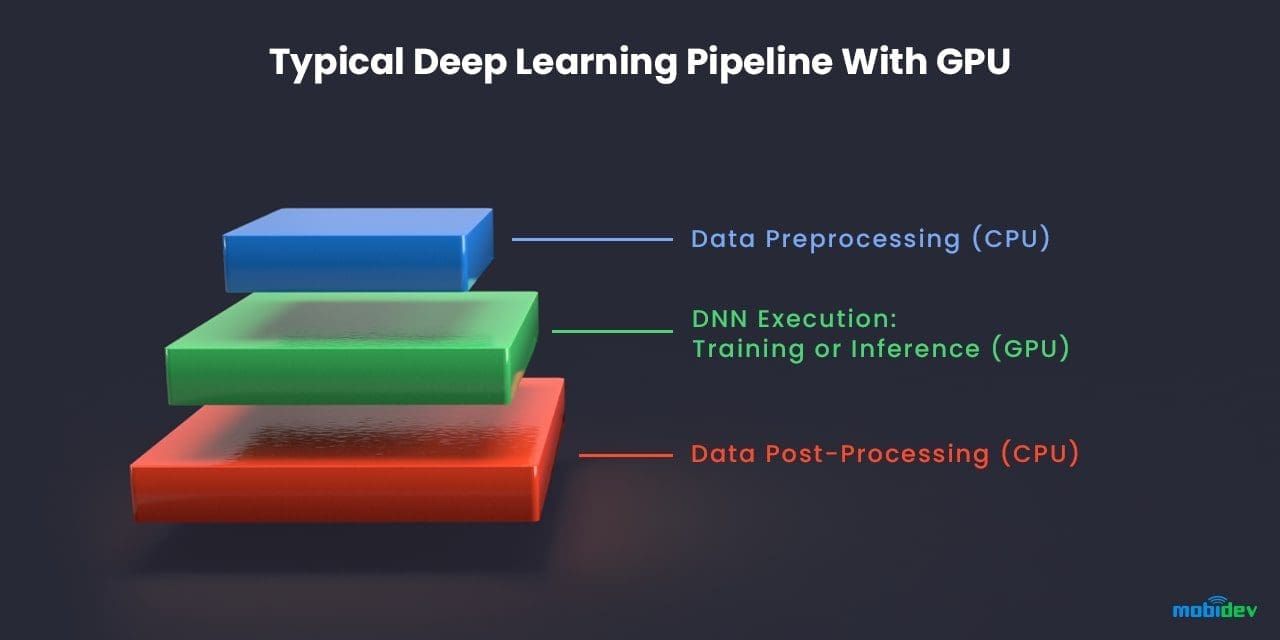

Setting up a Deep Learning Workplace with an NVIDIA Graphics Card (GPU) — for Windows OS | by Rukshan Pramoditha | Data Science 365 | Medium